An open source, full-size arcade cabinet that lets users play a range of open source games. Obviously, users could also add their own ROMs. For an example list of open source games, see https://osgameclones.com/

Category: Projects

Text-To-Speech Max Headroom and Majel Roddenberry

The fact that we don’t already have widely available open source TTS executables for Max Headroom and Majel Roddenberry is perhaps the greatest failure of the artificial intelligence movement to date. Frankly, I think it’s insane that this isn’t already a thing. Enter VALL-E, which can create TTS models of someone based on just a…

My New NAS Architecture: DIY Synology Running RAID 51

I have outgrown my two DS218+ Synology NAS boxes. They have served me well for longer than I can remember. I use them to store backups from all my servers and devices, as well as my media library and all my documents and projects. I have had these Synology boxes set up as a main…
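RAID 51 is generally understood as two RAID 5 arrays mirrored against each other (RAID 1 on top of RAID 5). Usable space is therefore one array's capacity minus one drive for parity, and the layout survives any three drive failures, because losing data requires at least two failed drives in each array. A minimal sketch of that arithmetic, assuming two identical arrays of equal-size drives; the function names `raid51_usable_tb` and `raid51_raw_tb` are hypothetical, and the 3 × 16 TB figures are only an illustration, not a confirmed description of this build.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a RAID 51 layout: two identical RAID 5 arrays
# mirrored as RAID 1. Whole-number TB, integer arithmetic only.
#   $1 = drives per RAID 5 array (must be >= 3)
#   $2 = size of each drive in TB

raid51_usable_tb() {
  local drives_per_array=$1 drive_tb=$2
  if (( drives_per_array < 3 )); then
    echo "RAID 5 needs at least 3 drives per array" >&2
    return 1
  fi
  # One drive per array goes to parity; the mirror copy adds no capacity.
  echo $(( (drives_per_array - 1) * drive_tb ))
}

raid51_raw_tb() {
  # Total raw capacity across both arrays, for comparison.
  echo $(( 2 * $1 * $2 ))
}

raid51_usable_tb 3 16   # prints 32
raid51_raw_tb 3 16      # prints 96
```

With three 16 TB drives per array, that works out to 32 TB usable out of 96 TB raw: a third of the raw space, which is the price of the extra redundancy.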

Baking Gluten Free Bread

A lot of people experience side effects after recovering from COVID. I developed lots of new allergies, including to gluten, garlic, and onions. This has led me to cook basically all my own food and rarely eat out. One of the challenges has been bread. Gluten-free bread from the store can be great, but it’s…

Current Gear List

Note: I’m working on getting pictures of everything.

New PC
- I got this Case because it fits in an IKEA Kallax shelf
- Motherboard
- RAM
- CPU / Cooler
- SSD
- Power Supply
- Video Card

New NAS (x2)
- 2x R310
- RAID Card
- SSD
- 3x 16TB WD Red Drives

Working At Home
- Main Webcam
- 4K Converter
- Power Supply
- Webcam (For…

Ask AI to name a personalized custom fabrication company

Create brand names for a distributed fabrication company specializing in making all sorts of personalized custom things to order using the following sorts of techniques:
- Laser-cut metal for things like business cards
- 3D-printed personalized tchotchkes and office desk ornaments
- Custom tee shirts and other clothing

The firm will focus on designs for LGBTQ people which highlight queer…

Assume This Is Probably A Scam: Here’s How To Check

Every August, an army of scammers emerges to prey on people looking for Burning Man tickets. As a regional chair of Burners Without Borders who has navigated this process many times, I decided to write this short piece on how to identify scammers and avoid being ripped off. The most important thing to…

Web App LAMP Server Setup 2022

Install LAMP Software

    sudo apt update && sudo apt upgrade
    sudo apt install fail2ban apache2 mariadb-server php php-{cli,bcmath,bz2,curl,intl,gd,mbstring,mysql,zip}
    sudo mysql_secure_installation

Install Certbot For SSL

To install an SSL certificate, use Certbot.

Install Resilio Sync

Install Resilio Sync for backup transport and versioning: https://help.resilio.com/hc/en-us/articles/206178924-Installing-Sync-package-on-Linux

Add Scripts For Backups And Permissions

Various scripts and files (that go in various places)…
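After the `apt install` step, it can help to confirm that PHP actually loaded the extensions those packages provide. A minimal sketch, assuming the extension names that `php -m` reports on Debian/Ubuntu: the `php-mysql` package shows up as `mysqli` (among others), and `php-cli` is a SAPI rather than an extension, so it is not checked. The function name `missing_php_modules` is hypothetical; it reads the module list on stdin so it can be exercised without PHP installed.

```shell
#!/usr/bin/env bash
# Hypothetical check: print each expected PHP extension that is missing from a
# module list (one name per line, as printed by `php -m`) read on stdin.
# Returns 0 if nothing is missing, 1 otherwise.
missing_php_modules() {
  # Extension names corresponding to the php-* packages in the install command.
  local expected=(bcmath bz2 curl intl gd mbstring mysqli zip)
  local loaded mod status=0
  loaded=$(cat)
  for mod in "${expected[@]}"; do
    # -x: match the whole line, so "gd" does not match e.g. "gdbm".
    if ! grep -qx "$mod" <<< "$loaded"; then
      echo "$mod"
      status=1
    fi
  done
  return "$status"
}

# Typical use on the server:
#   php -m | missing_php_modules && echo "all expected PHP extensions loaded"
```

Reading from stdin keeps the check independent of how PHP is invoked, so the same function works against `php -m` output saved from another machine.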

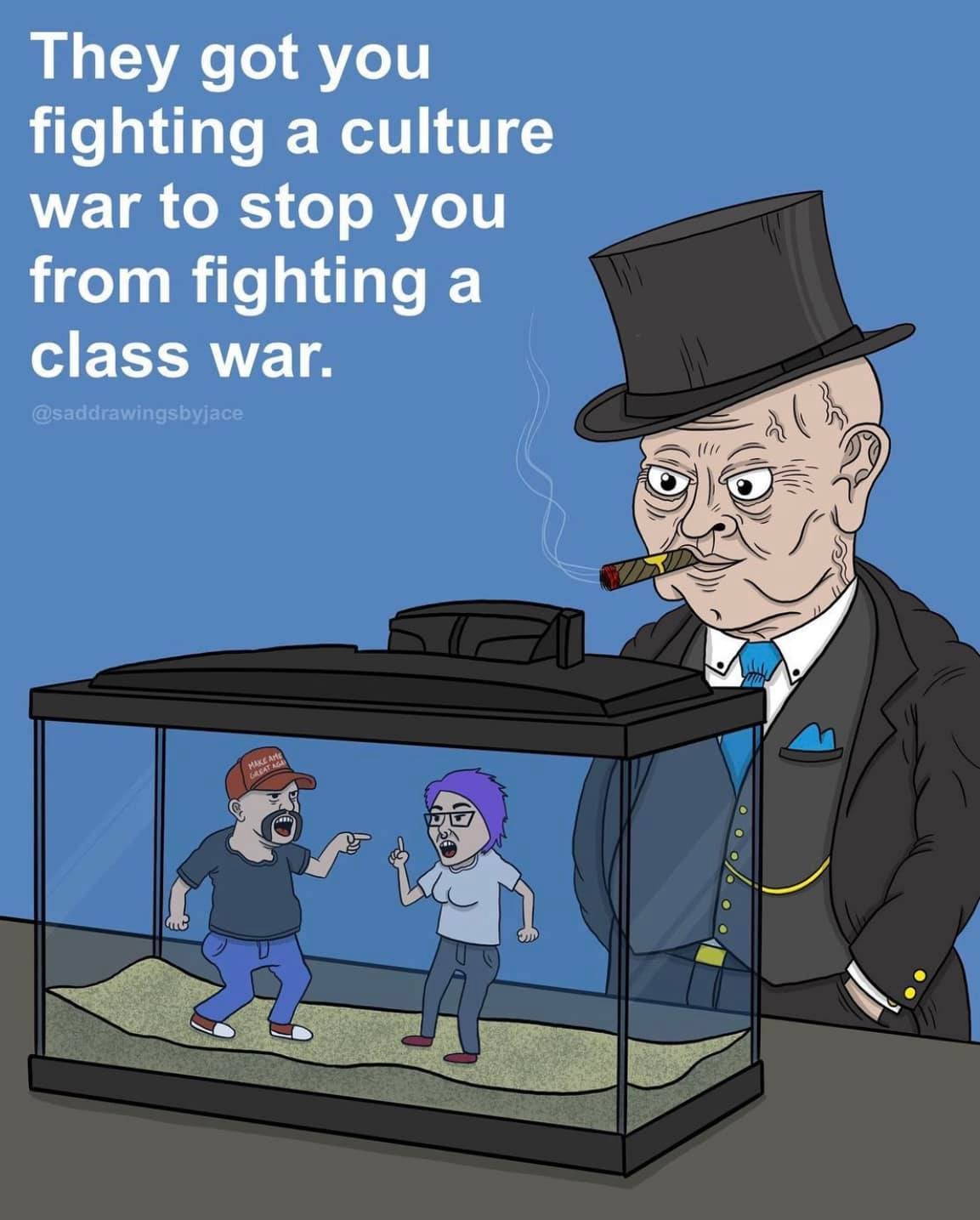

Ask AI to Analyze a Meme About Culture War vs Class War

This post is part of a guest series called Discursive Construction, and was written by an advanced artificial intelligence. The bold text at the beginning is the prompt, and the rest was written by the AI. If you’d like to support this project, please buy me a coffee. Advanced AI was invented by Soviet communists to help humanity…

Ask AI to Invent New Characters From William Gibson’s Sprawl Series

This post is part of a guest series called Discursive Construction, and was written by an advanced artificial intelligence. The bold text at the beginning is the prompt, and the rest was written by the AI. If you’d like to support this project, please buy me a coffee. For this post, AI also created the featured photos based…